Deliver

Candidate dashboard

Test-takers can conveniently access their tests and test results through the candidate dashboard.

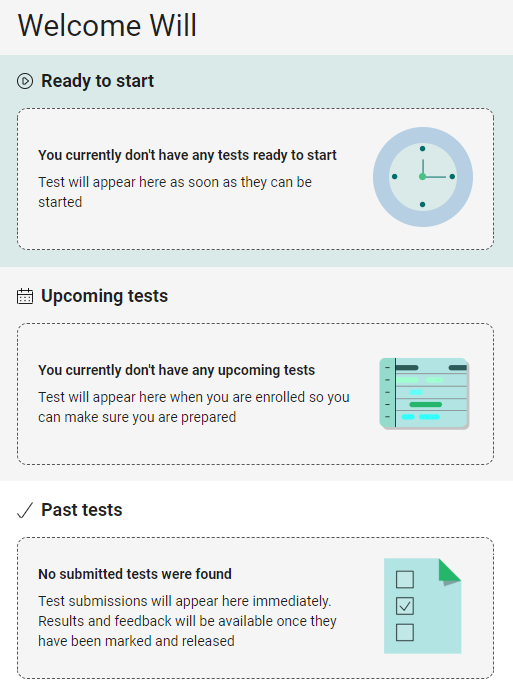

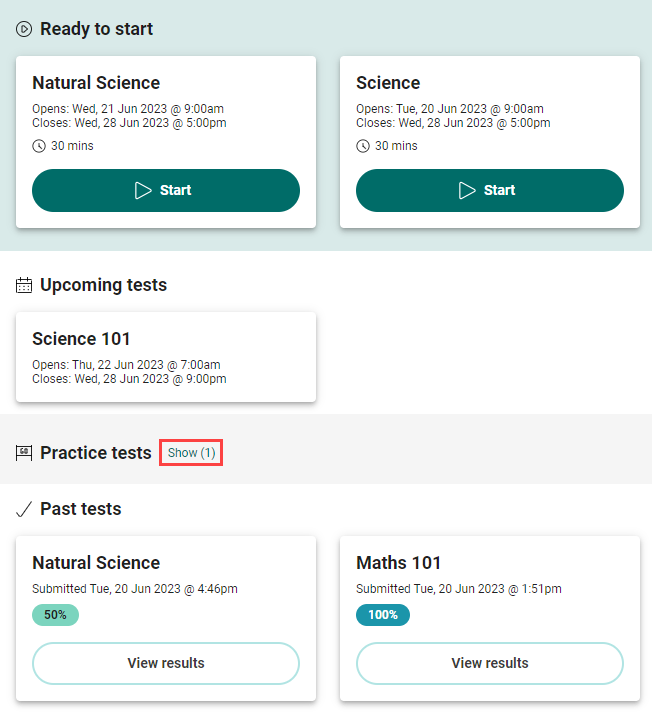

When a test-taker logs into the system, they see three sections listing their tests: Ready to start, Upcoming tests, and Past tests. If a practice test is available, a Practice section also displays. System administrators can configure the dashboard to hide the Upcoming, Past, and Practice sections.

As test-takers work through their tests, the sections and tiles update to reflect their progress.

Explore the slideshow to view various iterations of the dashboard reflecting the test-takers’ progress at different stages.

This test-taker doesn’t have any tests scheduled and has not taken any tests.

This test-taker has two tests ready to start, one test scheduled for the future, and two tests taken in the past. The practice test is hidden.

This is the same dashboard as in the previous slide, with the practice test displaying.

Let’s take a closer look at the tiles in each section.

Ready to start

This section lists tests that are available for the test-taker to start, and tests they have started but not submitted.

The name of the test displays at the top of the tile, with timing information below the name. The timing information reflects the Timing Options set by the administrator in the assessment event. If the test has a duration set, this value displays below the timing options. In these examples the time limit is set to 30 mins.

If a test-taker has not started a test, the Start button displays. Once they have started the test, the button changes to Resume. When they have submitted their test, the tile moves to the Past tests section.

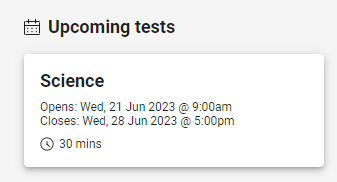

Upcoming tests

This section displays tests that are upcoming. Test-takers cannot start these tests until the open date. On the open date, the tile moves to the Ready to start section.

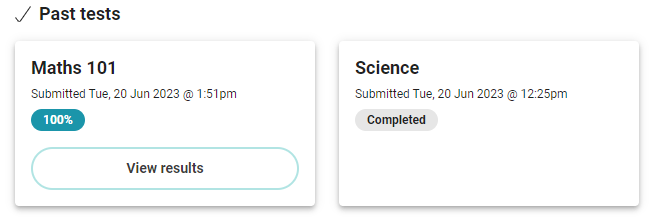

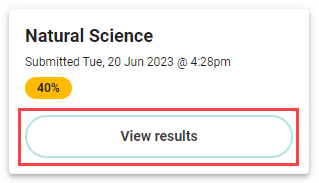
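The movement of a tile between sections can be summarised as a small decision rule. The following is a minimal sketch only, not the platform's implementation: the `ScheduledTest` class, its field names, and the `tile_placement` function are hypothetical, and close dates and practice tests are not modelled.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional, Tuple


@dataclass
class ScheduledTest:
    """Hypothetical record of one test assigned to a test-taker."""
    name: str
    open_date: datetime
    started: bool = False
    submitted: bool = False


def tile_placement(test: ScheduledTest, now: datetime) -> Tuple[str, Optional[str]]:
    """Return the dashboard section and the button label for a tile."""
    if test.submitted:
        # Submitted tests are listed under Past tests.
        return ("Past tests", "View results")
    if now < test.open_date:
        # Tests cannot be started before the open date, so no button is shown.
        return ("Upcoming tests", None)
    # Open and not yet submitted: Start, or Resume once the test is under way.
    return ("Ready to start", "Resume" if test.started else "Start")
```

For example, a test whose open date is still in the future maps to `("Upcoming tests", None)`, and the same test maps to `("Ready to start", "Start")` once the open date is reached.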

Past tests

This section lists tests that the test-taker has submitted. The tiles list the test name and the submitted date details.

If the test has not been marked, the tile will display as Completed.

Tiles with a test that has been marked will display one of the following results:

0 – 24% red

25 – 49% amber

50 – 74% green

75 – 100% blue
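The colour ranges can be read as a simple threshold lookup. The sketch below is illustrative only: the function name `result_colour` is hypothetical, and it assumes the ranges are contiguous, so the blue range is taken to start at 75% and fractional percentages fall into the range below the next threshold.

```python
def result_colour(percentage: float) -> str:
    """Map a marked test's percentage to the tile colour (assumed thresholds)."""
    if not 0 <= percentage <= 100:
        raise ValueError("percentage must be between 0 and 100")
    if percentage < 25:
        return "red"
    if percentage < 50:
        return "amber"
    if percentage < 75:
        return "green"
    return "blue"
```

Under these assumptions, a result of 49% displays as amber and a result of 50% displays as green.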

Test score band tables

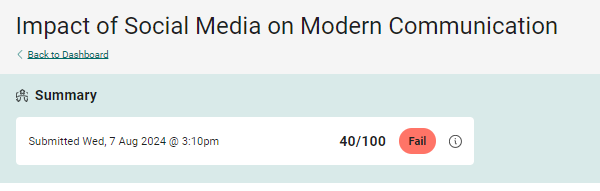

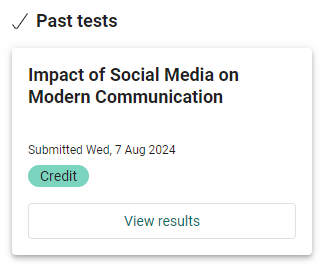

Test score band tables enable an author to assign specific names to ranges of scores on a test. When the system calculates results, the test-taker is assigned a band such as fail, pass, credit, or distinction, rather than a numerical score. These bands display on the test tiles and summary screens of the candidate dashboard. For more information on setting these names up, read about Test score band tables.
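Conceptually, a test score band table maps a range of scores to a name. The following is a rough sketch of that lookup, not the product's logic: the band names come from the examples in this article, but the minimum scores in `BANDS` and the function `band_for` are hypothetical values chosen for illustration.

```python
from bisect import bisect_right

# Hypothetical band table: (minimum score, band name), sorted by minimum score.
BANDS = [(0, "Fail"), (50, "Pass"), (65, "Credit"), (85, "Distinction")]


def band_for(score: float, bands=BANDS) -> str:
    """Return the name of the band whose range contains the score."""
    minimums = [minimum for minimum, _ in bands]
    # Find the last band whose minimum is less than or equal to the score.
    index = bisect_right(minimums, score) - 1
    if index < 0:
        raise ValueError("score is below the lowest band")
    return bands[index][1]
```

With this illustrative table, a score of 64 falls in the Pass band and a score of 65 falls in the Credit band. In practice the author defines the ranges and names in the test score band table itself.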

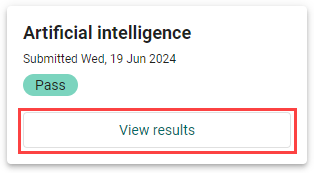

Below are some examples of how these would appear on the Past tests tile and on the summary section of the Results screen.

This is the tile on the Past tests section of the candidate dashboard. Instead of a percentage, the first test score band we set, Fail, displays. Move to the next slide to view the results screen.

When the test-taker selects View Results on the tile, the feedback screen displays. The test score band displays on the Summary.

This is the second test score band, Pass.

This is the third test score band, Credit.

This is the fourth test score band, Distinction.

Results and feedback

Test-takers can view their results and feedback by selecting the View results button on the test tile. Administrators can configure the feedback that test-takers receive to be either ‘Formative’ or ‘Detailed’.

Formative feedback can be used for tests which aim to promote and measure test-taker knowledge and skills. Formative feedback allows test-takers to review their tests in detail with the provided feedback for each question. This can be used as an ongoing tool to guide test-takers to better understand the limits of their own knowledge and how to improve. Read more.

Detailed feedback provides an overall evaluation of how well test-takers have met the learning goals of the assessment. It focuses solely on direct feedback against the test-taker’s response: the specific question details can be hidden, and custom attributes can be used to group or individually display test items, for example by learning outcome or subject area. Read more.

For instructions on how to switch between these two options, refer to the section on test feedback settings below.

For human marked items, feedback comes from the marker’s comment in the marking project. Read more.

For system marked items, feedback comes from the ‘Feedback and live marking’ section on the item. Read more.

Now, let’s explore the differences between these two types of feedback.

Formative feedback

The View Results button on the tile takes the test-taker to a summary page with three sections.

The Summary section displays the test submission time along with the percentage and score.

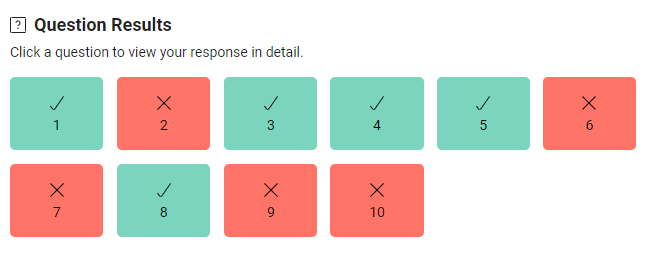

The Question Results section offers a visual summary of the items, indicating the ones that were answered correctly or incorrectly by the test-taker. By clicking on the items, test-takers can access their own responses.

The Detailed Results section offers additional information about the items including the item type and max score. Test-takers can also click the item links to view their responses.

View response in the test player

When a test-taker selects one of the items, it takes them to the test player where they can view their response. The information they can see depends on how the item author configured the feedback in the item. In the examples below, the author included correct and incorrect responses.

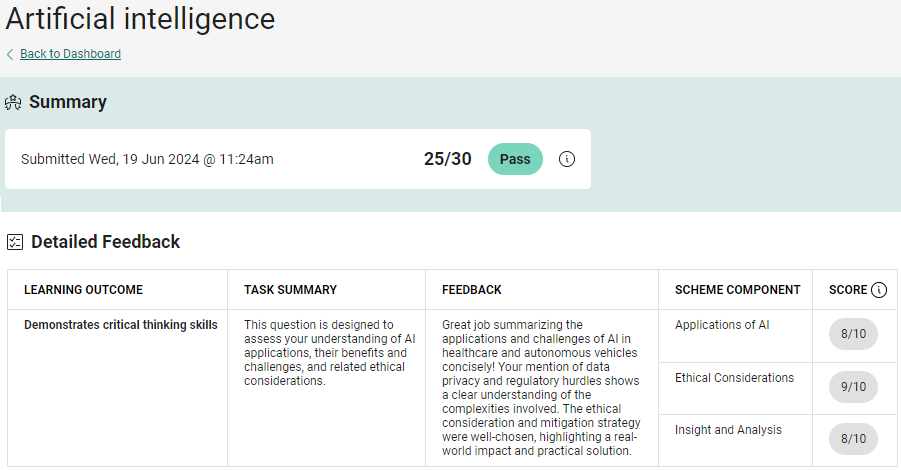

Detailed feedback

Detailed feedback provides an overall evaluation of how well test-takers have met the learning goals of the assessment. It focuses solely on direct feedback against the test-taker’s response: the specific question details can be hidden, and custom attributes can be used to group or individually display test items, for example by learning outcome or subject area.

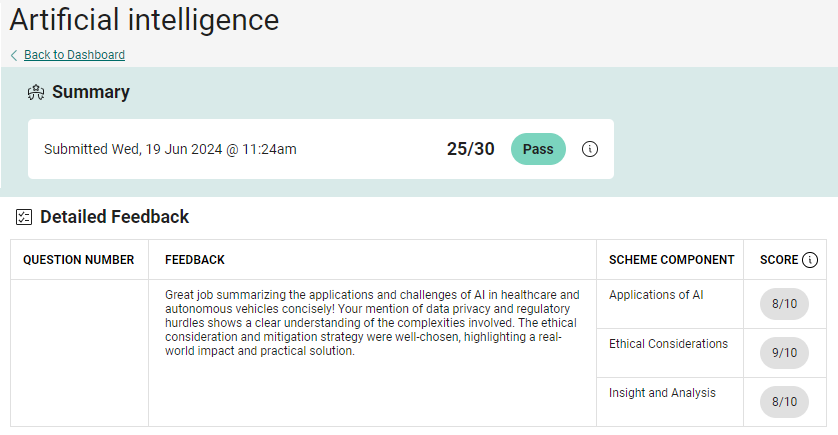

The View Results button on the tile takes the test-taker to a detailed feedback summary screen.

The details that display depend on the item type and the configuration of the item.

Human marked items

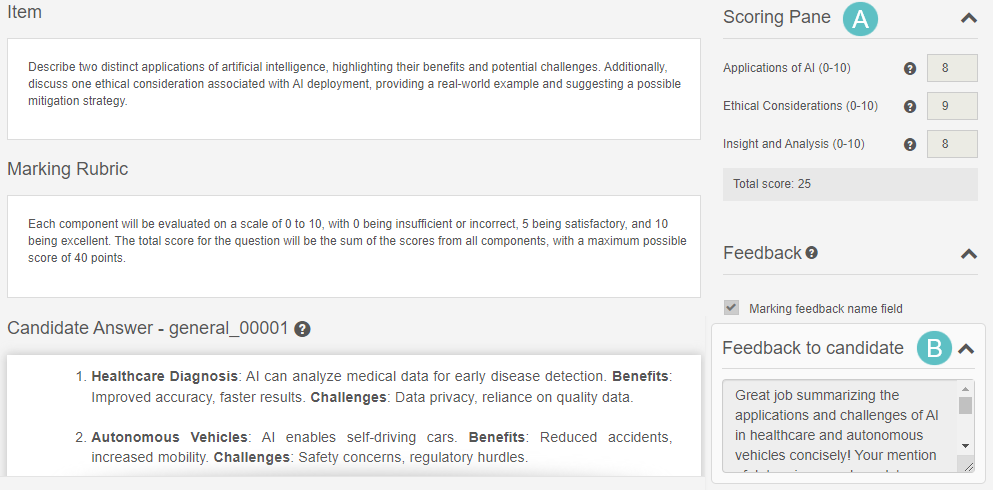

The example below is an extended text item type with three marking scheme components. There is only one item in this test, so the QUESTION NUMBER column is blank. The system populates the FEEDBACK column with the feedback that the marker leaves for the test-taker on the marking screen. The SCHEME COMPONENT and SCORE columns detail the score per component of the item.

Below is the marking screen for the above item:

A. This is the Scoring Pane containing the marking scheme components and the scores.

B. This is the marker feedback left for the test-taker.

System marked items

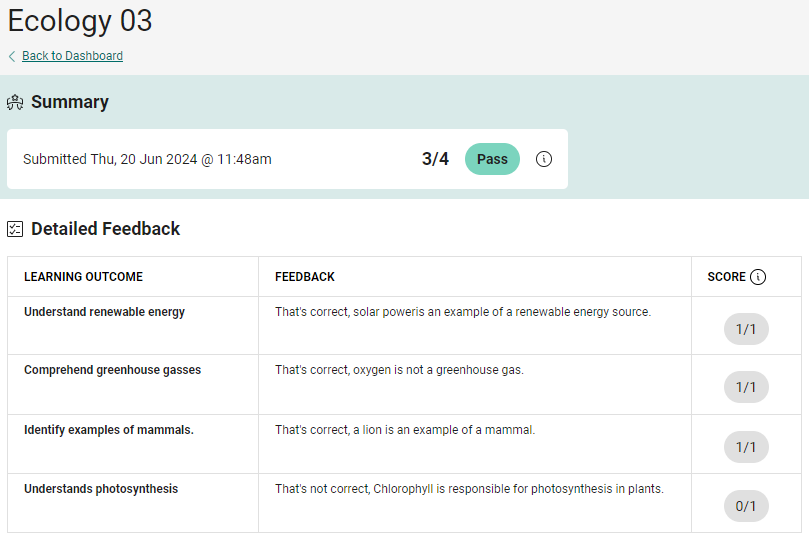

The example below is the detailed feedback for a test with four multiple-choice items with Correct / Incorrect Response Feedback set. The LEARNING OUTCOME column is a custom attribute we added to the item. This is covered in detail below.

Add columns to detailed feedback

It’s possible to add up to two custom columns to the detailed feedback screen. There are three steps involved to make these columns display.

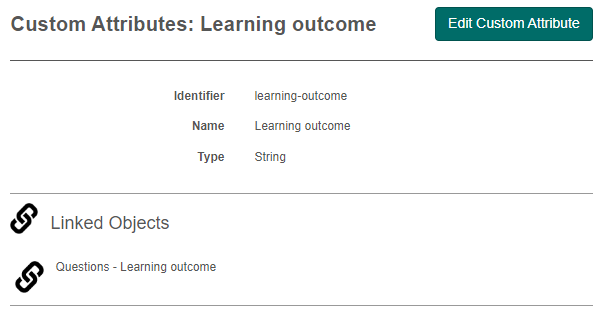

Step one: Create one or two custom attributes as required. Read more about custom attributes. In this example we created two custom attributes of a string (free text) type: one named ‘Learning outcome’ and the other ‘Task summary’.

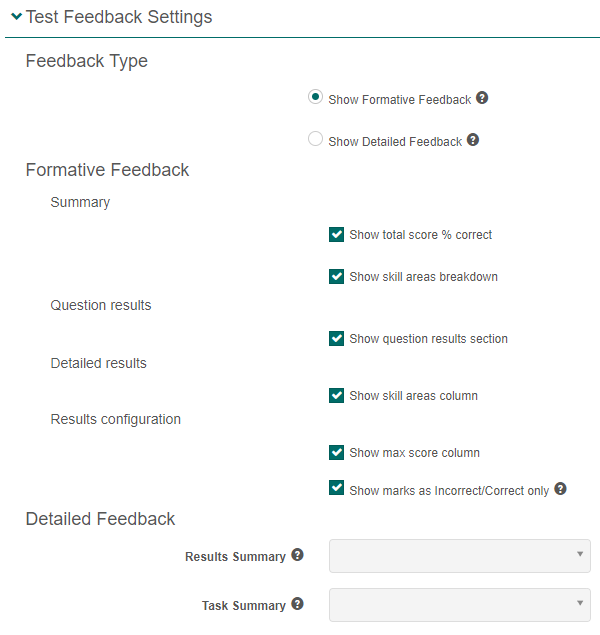

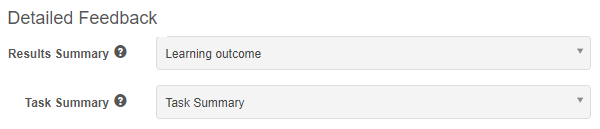

Step two: In Settings > Test Delivery Settings, expand the Test Feedback Settings section and select your custom attributes. You can select one or both of the options.

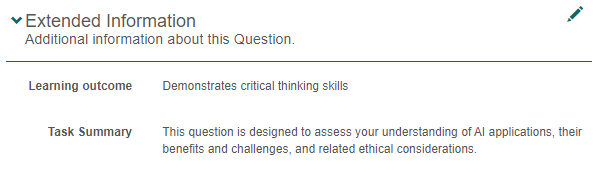

Step three: In the Extended Information expandable section on the item, enter the text relevant to the item.

These columns now display on the detailed feedback page as in the screen capture below.

Settings

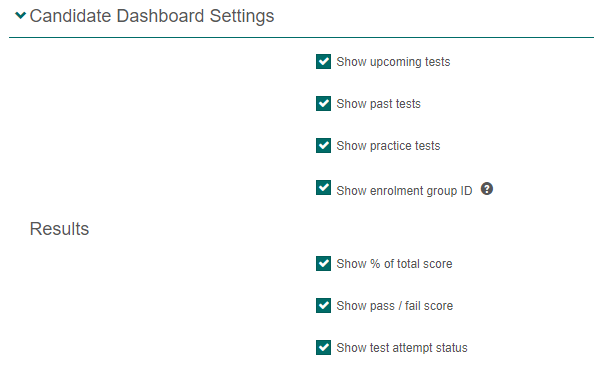

If you’re a system administrator you can alter the features of the dashboard. If you don’t have admin access, contact your account manager at Janison for assistance.

Candidate Dashboard Settings

You can display or hide sections, and set what you want test-takers to see on the results tiles.

Browse to Settings > Test Delivery Settings and open the Candidate Dashboard Settings section. Make the changes as required.

Test Feedback Settings

Browse to Settings > Test Delivery Settings and open the Test Feedback Settings section.

To switch the Feedback Type, select either the Formative or the Detailed option. Only the options relevant to your selection remain available; the others become inactive.